Frugal nature: Euler and the calculus of variations

by Phil Wilson

Dido and her lover Aeneas

Aeneas tells Dido about the fall of Troy. Baron Pierre-Narcisse Guérin.

Denied by her brother, the killer of her husband, a share of the golden throne of the ancient Phoenician city of Tyre, Dido convinces her brother's servants and some senators to flee with her across the sea in boats laden with her husband's gold. After a brief stop in Cyprus to pick up a priest and to "acquire" some wives for the men, the boats continue, rather lower in the water, to the northern coast of Africa. Landing in modern-day Tunisia, Dido requests a small piece of land to rest on, only for a little while, and only as big as could be surrounded by the leather from a single oxhide. "Sure," the locals probably thought, "We can spare such a trifling bit of land."

Neither history nor legend recalls who wielded the knife, but Dido arranged to have the oxhide cut into very thin strips, which tied together were long enough to surround an entire hill. "That'll do nicely," we can imagine Dido thinking, and I'm sure we can all make a pretty good guess as to what the locals were thinking too. The city of Carthage was founded on this hill named Byrsa ("Oxhide"), and the civilisation it fostered became a major centre of culture and trade for 668 years until its destruction in 146 BCE, although the city lives on as a suburb of Tunis.

Celebrated in perishable poems and paintings, Queen Dido has been given more durable fame by mathematicians, who have named the following problem after her:

Given a choice from all planar closed curves [curves in the plane whose endpoints join up] of equal perimeter, which encloses the maximum area?

The Dido, or isoperimetric, problem is an example of a class of problems in which a given quantity (here the enclosed area) is to be maximised. But the Dido problem is also equivalent to asking which of all planar closed curves of fixed area minimises the perimeter, and so is an example of the more general problem of finding an extremal value — a maximum or minimum. Extremising problems have been an obsession among physicists and mathematicians for at least the last 400 years. A first mention comes from much further back: around 1700 years ago Pappus of Alexandria noted that the hexagonal honeycombs of bees hold more honey than squares or triangles would.
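To get a feel for the problem, here is a minimal sketch comparing the areas of regular polygons that all share one perimeter with the area of a circle of the same circumference. The function names and the perimeter value are assumptions made for illustration; the formulas are the standard ones for regular polygons and circles.

```python
import math


def regular_polygon_area(n_sides: int, perimeter: float) -> float:
    """Area of a regular polygon with n_sides sides and the given total perimeter."""
    return perimeter ** 2 / (4 * n_sides * math.tan(math.pi / n_sides))


def circle_area(perimeter: float) -> float:
    """Area of a circle whose circumference equals the given perimeter."""
    return perimeter ** 2 / (4 * math.pi)


PERIMETER = 1.0  # arbitrary fixed length of oxhide strip (illustrative value)

# Triangle, square, hexagon, and two many-sided polygons, all with the same perimeter.
for n in (3, 4, 6, 12, 100):
    print(n, regular_polygon_area(n, PERIMETER))

print("circle", circle_area(PERIMETER))
```

The printed areas grow with the number of sides and stay below the area of the circle, which is consistent with Pappus's remark that hexagons beat squares and triangles.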

Extremising principles exhibit an apparent universality and deductive power that has led many otherwise rational minds to take a few nervous steps backwards and invoke a god or other mystical unifying animistic force to account for them. One such mind belonged to Leonhard Euler, the Swiss mathematician whose 300th anniversary we celebrate this year. This reaction is perhaps less surprising in Euler's case, since he entered the University of Basel at the age of 14 to study theology. Luckily for maths he spent his Saturdays hanging out with the great mathematician Johann Bernoulli.

Bernoulli was great, but Euler was greater, and his lifetime output of over 800 books and papers laid the foundations of research fields that remain vital today, including fluid dynamics, celestial mechanics, number theory, and topology. Another Plus article this year has documented the colourful life of this father of 13 who would knock out a paper before dinner while dangling a baby on his knee. We will focus on Euler's calculus of variations, a method applicable to solving the entire class of extremising problems.

Extreme answers to tricky questions

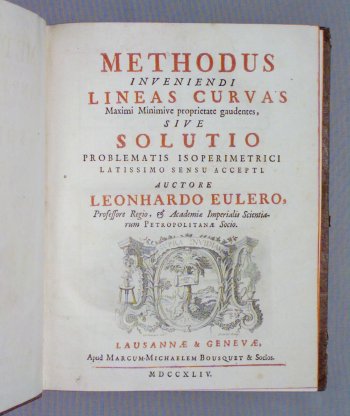

The front page of Euler's 1744 book. Image courtesy Posner Collection, Carnegie Mellon University Libraries, Pittsburgh PA, USA.

Mathematicians and scientists had long been playing with the ideas which Euler systematised in his 1744 book Methodus inveniendi lineas curvas maximi minimive proprietate gaudentes, sive solutio problematis isoperimetrici latissimo sensu accepti (A method for finding curved lines enjoying properties of maximum or minimum, or solution of isoperimetric problems in the broadest accepted sense). Indeed, Euler himself first published on this topic in 1732, when he wrote the article De linea brevissima in superficie quacunque duo quaelibet puncta jungente (On the shortest line joining two points on a surface) based on an assignment given to him by Bernoulli: persuasive encouragement to always do your homework. It was in his 1744 book, though, that Euler transformed a set of special cases into a systematic approach to general problems: the calculus of variations was born.

Euler coined the term calculus of variations, or variational calculus, based on the notation of Joseph-Louis Lagrange whose work formalised some of the underlying concepts. In their joint honour, the central equation of the calculus of variations is called the Euler-Lagrange equation. But why call it a calculus at all? What has it to do with the Newton-Leibniz differential calculus we encounter at school?

Two graphs of functions.

Figure 1: If the curve is flat, as the one shown on the top, then a small change in x corresponds to a small change in y. The steep curve below has the same change in x corresponding to a larger change in y. To find the slope at a given point, you calculate the limit of the ratio of the change in y to the change in x as the size of the interval on the x-axis tends to zero. At the highest point of both curves, the maximum, the slope is zero.

Differential calculus is concerned with the rates of change of quantities. Take, for example, the slope of a graph representing a function of one independent variable. We might call the variable x and the function y(x). To work out the slope at a given point x, we move a little to the left of x and then a little to the right of x and measure how the value of the function changes from the leftmost position to the rightmost. The ratio of the change in function value to the change in variable value gets closer and closer to the actual slope of the function as the little bit we move to the left and right shrinks to zero. Applying this process to every point x, you end up with the function's derivative, usually written as dy/dx or y'(x).
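The left-and-right procedure just described can be sketched numerically. The function y(x) = x^3, the point x = 2 and the step sizes are assumptions chosen for illustration; the exact slope there is 12.

```python
def central_difference(f, x, h):
    """Ratio of the change in f to the change in x, stepping h to the left and h to the right of x."""
    return (f(x + h) - f(x - h)) / (2 * h)


def y(x):
    """Illustrative function; its exact derivative is 3 * x**2."""
    return x ** 3


# Shrinking the step moves the ratio closer to the exact slope 3 * 2**2 = 12.
for h in (0.1, 0.01, 0.001):
    print(h, central_difference(y, 2.0, h))
```

Each printed ratio is closer to 12 than the one before it, mirroring the limit that defines the derivative.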

Differential calculus enables you to find the stationary points of functions, locations at which the slope is zero; these are either extrema (maxima or minima) or points of inflection of the curve. The stationary points are the values of x which satisfy the equation dy/dx = 0.
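As a small worked example (the function is chosen here purely for illustration and does not appear in the original article), take y(x) = x^3 - 3x and look for the points where the slope vanishes:

```latex
\begin{align*}
y(x) &= x^3 - 3x, \\
\frac{dy}{dx} &= 3x^2 - 3 = 0 \quad\Longrightarrow\quad x = \pm 1, \\
\frac{d^2y}{dx^2} &= 6x: \quad \text{negative at } x = -1 \text{ (a maximum)}, \quad
  \text{positive at } x = 1 \text{ (a minimum)}.
\end{align*}
```

By contrast, y(x) = x^3 has slope 3x^2, which vanishes at x = 0 even though the curve keeps rising on both sides: that stationary point is a point of inflection rather than an extremum.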

The calculus of variations also deals with rates of change. The difference is that this time you are looking at functions of functions, which are called functionals. As a simple example, think of all the non-self-intersecting curves connecting two given points in the plane. For each curve c, you can work out its length l(c) (if you know your calculus, you'll remember that this is done using integrals). If you want to know how length varies over the different curves, you treat c as a variable and the length l(c) as a function of the variable. But now c isn't as simple as our x above: it's not just a placeholder for a number, but a curve. Mathematically, a curve can be written as a function; the straight line in figure 2, for example, consists of all points with coordinates (x,2x). In this example the y coordinate of each point (x,y) on the line is equal to 2x, so we can express the curve as the function

y(x) = 2x.

In general the length l(c) is a function of a function: a functional.

Figure 2: The line y = 2x.
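The length functional can be sketched in a few lines: it takes a whole function as its input and returns a single number. The helper names, the endpoints (0,0) and (1,2), and the "bent" comparison curve are assumptions made for illustration; the length is approximated by adding up many short straight segments rather than by evaluating the integral exactly.

```python
import math


def curve_length(y, x_start, x_end, n_segments=10_000):
    """Approximate the length of the graph of y between x_start and x_end by summing short chords."""
    dx = (x_end - x_start) / n_segments
    total = 0.0
    for i in range(n_segments):
        x0 = x_start + i * dx
        x1 = x0 + dx
        total += math.hypot(dx, y(x1) - y(x0))
    return total


def straight(x):
    """The line y = 2x from figure 2."""
    return 2 * x


def bent(x):
    """A hypothetical curve with the same endpoints (0, 0) and (1, 2) but a bump in between."""
    return 2 * x + 0.3 * math.sin(math.pi * x)


print(curve_length(straight, 0.0, 1.0))  # close to sqrt(5), the exact length of the line
print(curve_length(bent, 0.0, 1.0))      # longer than the straight line
```

Feeding different functions into curve_length is exactly what it means to treat the curve as the variable.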

The calculus of variations enables you to find stationary points of functionals and the functions at which the extrema occur, the extremising functions. (Mathematically, the process involves finding stationary points of integrals of unknown functions.) In our example, an extremising curve would be one that maximises or minimises curve length.

It turns out that the extremising functions are those which satisfy an ordinary differential equation, the Euler-Lagrange equation. Euler and Lagrange established the mathematical machinery behind this in a formal and systematic way and in full generality for all extremising problems. Importantly, the new systematic theory allowed for the inclusion of constraints, meaning that a whole swathe of problems in which something must be extremised while something else is kept fixed could be solved. Dido, who maximised the area for a fixed perimeter, would have been proud.
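To make this concrete, here is the equation in its standard textbook form, applied to the curve-length example from earlier. The symbols J, F, a and b are notation assumed for this sketch rather than taken from the article, and the block needs the amsmath package.

```latex
\begin{align*}
J[y] &= \int_a^b F\bigl(x, y(x), y'(x)\bigr)\,dx, \\
\frac{\partial F}{\partial y} - \frac{d}{dx}\frac{\partial F}{\partial y'} &= 0
  \qquad \text{(Euler-Lagrange equation)}. \\
\intertext{For curve length, $F = \sqrt{1 + y'^2}$ does not depend on $y$, so}
\frac{d}{dx}\!\left(\frac{y'}{\sqrt{1 + y'^2}}\right) &= 0
  \quad\Longrightarrow\quad y' = \text{constant}.
\end{align*}
```

So the extremising curve has constant slope: it is a straight line, which is indeed the shortest path between two points in the plane.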

Lazy nature

Euler's foundational 1744 book is one of the first (along with the works of Pierre Louis Maupertuis) to present and discuss the physical principle of least action, indicating a deep — and controversial — connection between the calculus of variations and physics. The principle of least action can be stated informally as "nature is frugal": all physical systems — the orbiting planets, the apple that supposedly fell on Newton's head, the movement of photons — behave in a way that minimises the effort required. The word "effort" here is used in a rather vague sense — the quantity is more properly termed the action of the system. So what is this action, and what (or who, we might once have asked) does the minimising? Why should the universe be run along this parsimonious principle anyway?

Euler formulated a precise definition of the action for a body moving without resistance. Once you know what the action of a particular system is, Euler and Lagrange's calculus of variations becomes a powerful tool for deducing the laws of nature. If you know where and when the body started out and where and when it ended up, then you can use variational calculus to find the path between these endpoints that minimises action. According to the least action principle, this is the path the body must take, so the method should give you information on the fundamental laws governing the motion. If you do the calculations, you'll find out that the well-known equations of motion do indeed pop out in the end. Since Euler's time, appropriate actions have been defined for all sorts of physical systems. Euler and Lagrange contributed substantially to this work, but we should also mention William Rowan Hamilton, who built on Euler's work to bring the least action principle to its modern form. Least action principles play an important role in modern physics, including in the theory of relativity and, as we shall see, in quantum mechanics.
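As a sketch of how the equations of motion pop out, take a particle of mass m moving along a line in a potential V(x), with the action written in the modern form usually credited to Hamilton (not necessarily Euler's original definition). The symbols S, L, m, V, t_0 and t_1 are notation assumed here for illustration.

```latex
\begin{align*}
S[x] &= \int_{t_0}^{t_1} L(x, \dot{x})\,dt,
  \qquad L = \tfrac{1}{2} m \dot{x}^2 - V(x), \\
\frac{\partial L}{\partial x} - \frac{d}{dt}\frac{\partial L}{\partial \dot{x}} &= 0
  \quad\Longrightarrow\quad -V'(x) - m\ddot{x} = 0
  \quad\Longrightarrow\quad m\ddot{x} = -V'(x).
\end{align*}
```

The final equation is Newton's second law, with -V'(x) playing the role of the force.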

Maths and god: the limits of knowledge

The principle of least action raises two deep and unanswered issues which bump up against the limits of our knowledge and of what is knowable. It's these that have caused scientists to invoke a god as a default answer, a hypothesis which, arguably, explains nothing at best, and at worst raises more difficult questions than it answers.

The first issue is why our universe should be parsimonious. Stopping short of invoking a god as an explanation, how can we address such an issue? One way would be to ask what a universe without a least action principle would be like. Could life arise? Could explanatory theories and accurate predictions be made in such a universe? This possible line of reasoning invokes the anthropic principle, which states that naturally the universe seems explainable and cosy for life, since, if it were not, we wouldn't be around to argue about it. Unfortunately, this answer leaves our current science behind, because there is as yet no way we can test whether it is true or not.

Sun shining through clouds

Why do sunbeams travel along optimal paths?

The second issue is how the universe achieves being parsimonious. The principle of least action and variational calculus seem to suggest that the behaviour of everything in the universe is dictated by the future. A particle can only take a path of least action if it "knows" where it is going to end up — different endpoints will yield different paths. But how can that be the way the universe actually operates? How can the universe depend on knowing where the system ends up in order to work out how it got there? It doesn't make any sense ... yet the method of variational calculus works. This issue goes beyond the teleological notion that there is a plan or design in the universe. It invokes perfect knowledge of the future for the entire universe — everything is done and dusted and things like free will, morality and scientific endeavour become meaningless.

One possible solution to this problem may lie in a future understanding of how the laws of quantum mechanics — the unbelievably accurate but non-commonsensical theory of the interactions of subatomic particles — give rise to the everyday laws and concepts we see around us. Since we don't operate at the quantum mechanical length scales or time scales, we see only approximations of underlying reality. Our laws may arise from statistical correlations smoothed over innumerable interactions at the smallest scales. There is indeed a least action principle of quantum mechanics and, promisingly, it presents no teleological problems.

Putting aside unresolved philosophical questions, the calculus of variations is a technique of great power used every day by scientists and mathematicians around the globe to solve real questions posed by the natural, industrial, and biomedical worlds. We will end with an event early in the life of its founder and birthday boy of the year, Leonhard Euler. In 1727, at the age of 20 and before formulating the calculus of variations, Euler caught the world's attention by writing a maths essay (there's hope for me yet) which received an honourable mention in the annual Grand Prix of the Paris Academy of Sciences, certainly the Nobel Prize of the day. Euler's essay was motivated by a real-world problem: how to position the masts on a ship optimally. And this came from a man who had never left land-locked Switzerland, nor seen a big sailing ship. He wrote "I did not find it necessary to confirm this theory of mine by experiment because it is derived from the surest and most secure principles of mechanics, so that no doubt whatsoever can be raised on whether or not it be true and takes place in practice." Even after Newton, the dangerous idea that mathematics could faithfully reproduce reality was still startling. To this day the implications of this idea are changing the world.

About the author

Phil Wilson

Phil Wilson is a lecturer in mathematics at the University of Canterbury, New Zealand. He applies mathematics to the biological, medical, industrial, and natural worlds. You can read more of his inspired writing on his blog.

http://plus.maths.org/issue44/features/wilson/index.html

http://tinyurl.com/3fxgcb